Array programming, part 2

In this post, I want to go over the main features of array programming languages. I’m going to concentrate on the “Iversonian” languages i.e. those directly descended from APL, as these are the most “pure” array languages. However, many of these features are shared by other languages, as we’ll see.

Multidimensional rectangular arrays as the fundamental datatype

Array programming languages have arrays as the fundamental datatype. How fundamental? Even scalar values like integers are considered to be arrays (they are “rank zero” arrays). Furthermore, these languages always allow arrays of arbitrary dimension. Most array languages represent arrays internally as a one-dimensional sequence of values with additional data to indicate the size along each dimension. This means that these arrays are constrained to be hyperrectangular, which basically means that they aren’t “ragged”; for instance, each row in a matrix (array of rank 2) has the same number of columns.

Since there are many situations where hyperrectangular arrays are too rigid to represent particular kinds of data in a natural way, array languages also allow “boxing”, an operation which takes an array and converts it into a “scalar” value, which internally would be represented by a pointer to the data. You can also “unbox” boxed data to retrieve the original array. Here’s what this looks like in J:

< 1 2 3 NB. Box an array.

+-----+

|1 2 3|

+-----+

a =: < 1 2 3

a

+-----+

|1 2 3|

+-----+

>a NB. Unbox a boxed array.

1 2 3

The < operator “boxes” the array 1 2 3 into a single scalar value,

while the > operator “unboxes” it.

(The output is printed out in a stylized way to show the boxing.)

< operator, like almost all J operators, has two meanings.

The “dyadic” (two argument) meaning is the familiar less-than operation.

The “monadic” (one argument) meaning is boxing.The ; operator used “dyadically” (as a two-argument operator)

will box both its arguments and make or extend an array of boxes:

1 2 3 ; 4 5

+-----+---+

|1 2 3|4 5|

+-----+---+

1 2 3 ; 4 5 ; 6

+-----+---+-+

|1 2 3|4 5|6|

+-----+---+-+

] a =: 1 2 3 ; 4 5 ; 6

+-----+---+-+

|1 2 3|4 5|6|

+-----+---+-+

> a

1 2 3

4 5 0

6 0 0

(I slipped in the ] operator above in the second-last input line;

this allowed me to assign the ragged array 1 2 3 ; 4 5 ; 6 to a

and display it after assignment.)

Note that when unboxing a ragged array,

a rectangular array is created;

the extra spaces get filled with a “fill value”,

which is 0 by default for integers.

Even strings are represented as arrays of characters. J uses the single-quote character as a string delimiter:

'this is a string'

this is a string

s =: 'this is a string'

$ s

16

The $ operator, used “monadically” (as a prefix operator)

gives the array dimensions;

s is a one-dimensional array of characters of length 16.

Boxing can be used to create dictionary-like structures:

3 2 $ 'foo' ; 1 ; 'bar' ; 2 ; 'baz' ; 3

+---+-+

|foo|1|

+---+-+

|bar|2|

+---+-+

|baz|3|

+---+-+

In theory, you can represent any kind of compound data using arrays. In practice, it’s not always going to be the most efficient choice; for instance, an array of key-value pairs is not going to outperform a hash table. Some array languages (e.g. K and APL) provide ways to represent tabular data more efficiently as a built-in special-purpose data structure.

Of course, lots of non-array languages (actually, pretty much all languages) are perfectly capable of representing multidimensional arrays, (though they don’t use arrays as the universal data structure). So this is not nearly enough to qualify a language as an “array language”; something more fundamental is needed.

That something is rank polymorphism.

Rank polymorphism

In programming, “polymorphism” (which is Latin for “many shapes”) refers to something (often a function) that can be used on many kinds of data. In functional programming, one kind of polymorphism is represented by a function that can take (say) a list containing any type of items as its argument. In object-oriented programming, there are methods that can act on either an instance of a class or an instance of any subclass of a class, which is a different kind of polymorphism.

Array languages have yet another kind of polymorphism,

usually referred to as rank polymorphism.

This refers to the ability of functions and operators to act on arrays

regardless of their rank.

The rank of an array is the number of dimensions in the array.

So, for instance, the addition operator (+)

can add two scalar (rank 0) numbers,

two one-dimensional vectors (rank 1),

two two-dimensional matrices (rank 2),

and so on up to arbitrary higher dimensions.

1 + 2 NB. rank 0 addition

3

1 2 3 + 4 5 6 NB. rank 1 addition

5 7 9

]a =: i. 2 3

0 1 2

3 4 5

]b =: 2 3 $ |. i. 6 NB. `|.` reverses an array

5 4 3

2 1 0

a + b NB. rank 2 addition

5 5 5

5 5 5

What rank polymorphism buys you is that

you don’t have to write explicit loops to manipulate arrays.

Conceptually, it lets you think at the level of the entire arrays

instead of in terms of their components.

Functional languages get close to this with map functions,

but they are still much less convenient than array languages,

which do the mapping automatically.

Prefix agreement

Of course, it’s not always as simple as in the examples I just showed. If the two arrays have the same ranks but different shapes, they can’t be added element-by-element:

1 2 3 + 4 5 6 7

|length error

| 1 2 3 +4 5 6 7

But if they have different ranks, in many cases they can be added:

1 + 2 3 4 NB. scalar + vector

3 4 5

i. 2 3 NB. 2x3 matrix

0 1 2

3 4 5

1 2 + i. 2 3 NB. vector + matrix

1 2 3

5 6 7

Adding a scalar to an array just adds the scalar to each element. Effectively, the scalar is “expanded” to match the dimensions of the array it will be added to.

Adding a vector to a matrix only works if

the number of elements in the vector

is the same as the number of rows in the matrix.

More generally, the dimensions of the lower-rank array

(in this case, 2 for the array 1 2)

must be a prefix of the dimensions of the higher-rank array

(in this case 2 3 for i. 2 3).

This is called prefix agreement and is very important.

It means that you don’t have to manually expand the arrays

so that their shapes match (although that is also possible),

which makes rank polymorphism easier to use.

If the prefixes match,

the array with fewer elements is expanded to match the dimensions

of the other operand.

In this case, the array 1 2 is expanded to be

1 1 1

2 2 2

before being added to i. 2 3.

So 1 is added to the first row and 2 to the second row.

Python with the NumPy library also supports rank-polymorphic operations:

>>> from numpy import *

>>> a = array([[0, 1, 2], [3, 4, 5]])

>>> b = array([1, 2])

>>> b = array([1, 2])

>>> b + a

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ValueError: operands could not be broadcast together with shapes (2,) (2,3)

>>> c = array([1, 2, 3])

>>> c + a

array([[1, 3, 5],

[4, 6, 8]])

Curiously, NumPy uses postfix agreement rather than prefix.

Function and operator rank

Not all functions operate element-by-element on multidimensional arrays.

Consider “ravel”, written as ,,

which flattens any array into a one-dimensional array:

i. 2

0 1

, i. 2

0 1

i. 2 2

0 1

2 3

, i. 2 2

0 1 2 3

i. 2 2 2

0 1

2 3

4 5

6 7

, i. 2 2 2

0 1 2 3 4 5 6 7

Clearly, ravel acts on the entire array, not on its components.

We say it has “rank infinity”,

as opposed to +, which has “rank zero”,

since + acts on individual elements of arrays.

Most J verbs (functions) have ranks 0 or infinity,

but some have ranks 1, 2 or even negative ranks.

Two-argument operators can also have different ranks for each argument.

There are a lot of subtleties with rank polymorphism.

Sometimes you need to change the rank of an operator

to achieve the result you want;

this is done with the rank conjunction (written " in J).

Here’s an example:

i. 2 3

0 1 2

3 4 5

+/ i. 2 3 NB. sum rows: 0 1 2 + 3 4 5

3 5 7

+/"1 i. 2 3 NB. sum columns: 0 3 + 1 4 + 2 5

3 12

First off, the language has to be able to figure out the function rank

not only of built-in operators (like +)

but also of operators modified by “adverbs” (higher-order functions)

like the / fold operator.

And then you have to be able to change it again using the " conjunction

to get the particular kind of summation you might want.

The term “operator” is problematic when talking about array languages.

Most languages use “operators” to mean symbols representing

(usually) built-in two-argument functions like +, -, etc.

Array languages generally use the term “operator”

in the mathematical sense to refer to a function modifier

i.e. a higher-order function which changes the meaning of another function.

Perhaps to avoid this kind of confusion, the J language uses the term “verb” to denote a function (whether a user-defined name or a built-in symbol), “adverb” to denote a higher-order function which takes a single function as its argument, and “conjunction” to denote a higher-order function which takes two functions as arguments. J also uses “noun” to denote non-function data.

The syntax isn’t trivial either; +/"1 is parsed as ((+/)"1),

because adverbs like / are applied to their left arguments

and have higher precedence than conjunctions like ",

which are applied to their left and right arguments.

So let’s talk about syntax.

Syntax

The first thing people notice about programming languages is their syntax. This is triply true for array languages. There’s no way to sugar-coat it: most array languages have weird syntax. This is a big reason why they have never achieved great popularity, and, in my opinion, never will. The vast majority of programmers will simply recoil in horror at a very unusual syntax and immediately dismiss the language as “incomprehensible”. Only the most adventurous 1% of programmers can handle something as unusual as the syntax of array languages. So what makes the syntax of array languages so different?

Terseness

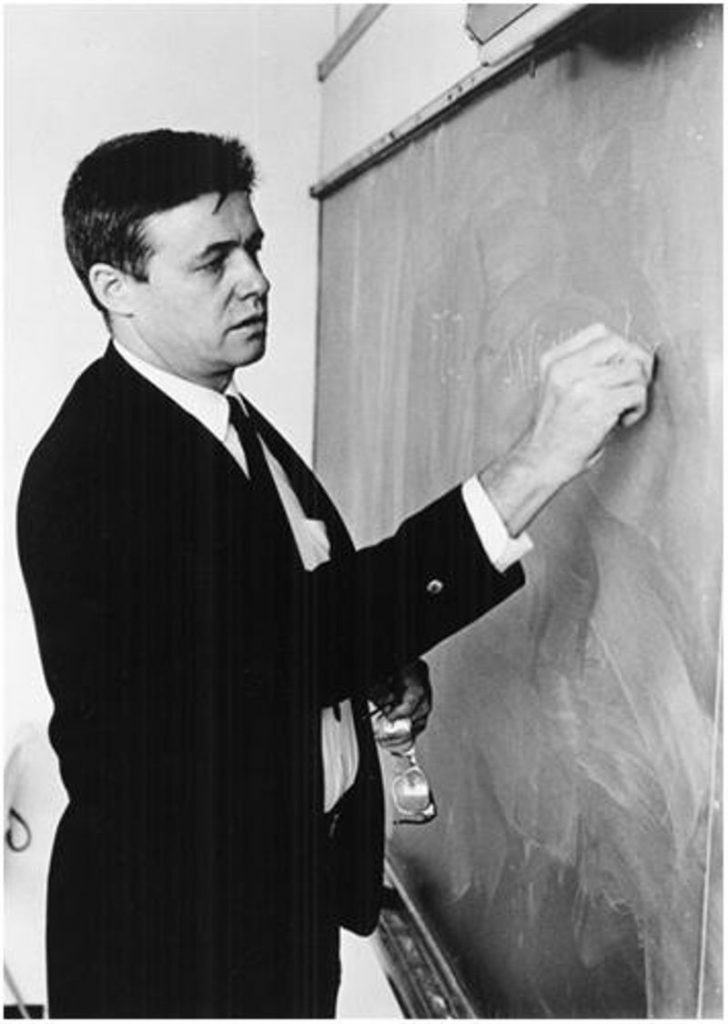

The syntax of most array languages have been very carefully designed to be as expressive as possible while using as few characters as possible. Ken Iverson was obsessed with notation; his Turing Award lecture was called Notation as a Tool of Thought. When confronted about the cryptic nature of his notation, Ken compared it to Chinese: something you can easily understand if you know Chinese, but incomprehensible otherwise. However, programmers are used to their languages having mostly interchangeable syntaxes, and so few are willing to spend enough effort learning an unfamiliar notation to get any benefit out of it.

Consider this example I’ve already shown:

summing a series to get an approximation for e,

the base of natural logarithms. In J it looks like this:

+/%!i.20

In Python with NumPy and SciPy, it looks like this:

sum(1.0/factorial(arange(20)))

That’s probably easier for you to understand, but it’s also more than three times as long. You can write it in a similar way in J too:

sum =: +/

reciprocal =: %

factorial =: !

range =: i.

sum reciprocal factorial range 20

But once you learn the notation, nobody is going to write it like this. So the terseness has value.

It’s important to understand that terse syntax is by no means an essential part of the array programming paradigm. However, starting with APL, most dedicated array languages have embraced extremely terse syntaxes.

Once you’ve learned what the symbols mean, the terseness is a big part of the fun of programming in an array language; you can express so much in so few characters! Some people derisively refer to this as “code golfing”, and it’s true that array languages are incredible code golfing languages. But a different view is that being able to express an entire algorithm in (say) one line of code qualitatively changes the way you write programs. Since you can write a program so quickly, you can also write multiple versions of the program quickly, and compare the different versions with respect to various metrics (say, space usage or time efficiency) in less time than it would take a programmer in more conventional languages to get a single working version. In more verbose languages, getting a single version working will be so much work that you will generally not want to write a second version. But in array languages, people do this all the time, because it’s so easy.

The downside, of course, is that the terse notation makes it much harder to read someone else’s code unless you are an expert. Array languages are often called “write-only” for this reason. The best-written array code I’ve seen mitigates this by keeping definitions short (in the “seven symbols, plus or minus two” sense). In contrast, code golfers love to build up a long, incomprehensible line of symbols to show how a program can be written in a single line. That’s a fun game, but it isn’t good array programming. I’ll show you some examples of both styles later.

Primitives

Because there are so many basic operations that can be done on multidimensional arrays (arithmetic operations, reshaping, reversing, concatenating, etc.), array languages have a large number of primitive symbols (what other languages call “operators”) that have predefined meanings.

Array languages typically have in the range of 50-100 special symbols representing built-in functions. (K has a much smaller number.)

Precedence

You can imagine that having complicated precedence rules in the presence of so many operators would simply be infeasible. In fact, these languages have a very simple precedence rule: a function applies to its entire argument to the right. This means that some expressions are going to parse very differently from what you might expect. For instance:

2 * 3 + 4

14

You probably expected the result 10,

but the precedence rule makes the above line parse as 2 * (3 + 4).

On the other hand:

3 + 4 * 2

11

This is parsed as 3 + (4 * 2).

So there’s no PEMDAS rule here!

The precedence rule is necessary to keep things simple in the presence of large numbers of symbolic operators, but this is yet another way that array languages deviate wildly from the expectations of most programmers. But we’re not done yet!

Symbols or digraphs

I’ve been using the

J language

for most of my examples.

This softens the blow of learning array languages somewhat,

despite the “line noise” feel of the syntax,

since J restricts itself to the keyboard-typeable ASCII character set.

But the original array language APL did not restrict itself in this way.

(To be fair,

the language was originally designed as a “chalkboard” notation

rather than as a programming language.)

Instead, it used one-character symbols for its primitives.

Some of these characters are familiar keyboard characters, like +.

Some are familiar from grade school arithmetic,

but absent from keyboards, like ×,÷, ≤, and ≠.

Negative numbers were written with a “high minus”

(for instance, ¯1) to avoid confusion with the - subtraction operator.

Some characters are Greek letters,

such as ⍴ (rho, or “reshape”) and ⍳ (iota, or “index-of”).

And many are simply made up, such as

⌈ (maximum), ⌊ (minimum), ↑ (take), ↓ (drop), ⌽ (rotate),

and many more.

Combinations of these characters give APL code a very alien appearance, but since you can express complex operations in single characters, it’s also very powerful (and kind of beautiful too). The downside (apart from the unfamiliarity) is that these characters aren’t available on normal keyboards, so APL interpreters (and editors) have to both support a custom font and have a way of entering characters in that font.

Even Ken Iverson must have realized that the APL symbols

were holding the language back,

because when he decided to revamp the language to form J,

he abandoned the symbols entirely.

Instead, he used one- or two-character sequences (“digraphs”)

with a particular structure.

For every “base symbol” such as +,

two additional digraph symbols (+. and +:) were used.

(Actually, you can add more . and : characters at the end,

and some J symbols do this.)

Ideally, the three symbols

(the base symbol,

the symbol with a . suffix,

and the symbol with a : suffix)

will have related meanings, though this isn’t always the case.

This gives J a pretty large symbol palette;

the ASCII character set has 32 symbolic characters;

even taking out ( and ) for their usual grouping function,

that leaves 30 characters, and with three options for each,

you get 90 possible symbols.

To this, J adds alphanumeric symbols

which consist of a letter or a digit followed by a . or :.

So you can easily encode equivalents to the APL symbols

in an ASCII representation.

The downside of this, of course, is that the code ends up looking like line noise. But if you can get past this, it’s reasonably convenient to work with. If you’re curious, you can see the entire J vocabulary here.

“Monadic” and “dyadic” meanings

But wait, there’s more! You can squeeze out even more meaning from the symbol set if you overload each symbol to mean different things when used as a two-argument operator or a one-argument prefix operator (there are no postfix operators). And, in fact, most array languages do this.

The documentation of most array languages

refer to the “monadic” and “dyadic” meanings of a symbol.

These words simply mean the one-argument and two-argument forms

of the symbol;

there’s no connection to “monads” in the Haskell or category theory sense.

For instance, the % operator means “division”

if used as a two-argument (dyadic) operator,

and “reciprocal” if used as a one-argument (monadic) prefix operator.

(Why % and not /?

Because / has a long history in array languages as the

“fold right” or “insert” higher-order function.)

2 % 3 NB. division

0.666667

% 3 NB. reciprocal

0.333333

Of course, the reciprocal operator is unnecessary;

you could also write 1 % 3.

But having a dedicated reciprocal operator is nice!

In general, the J vocabulary tries to make the monadic and dyadic meanings

of an operator related when possible.

It’s not always obvious when a symbol is going to be used as a monadic operation or a dyadic one, but if there is any doubt, you can always force the interpretation you want by judicious use of parentheses.

Syntactic roles

And again, we’re not done! Array languages support multiple different kinds of “syntactic roles”. Using J as an example, we have:

- Noun

- Non-function data

- Verb

- Function that operates on nouns: one (monadic) or two (dyadic)

- Adverb

- Higher-order function that modifies one verb

- Conjunction

- Higher-order function that modifies two verbs

These roles affect the syntax. The precedence rule given above applies to verbs: a verb is applied to the entire expression to its right. However, an adverb is applied to the verb (function) to its left. Here’s a simple example:

+/ 1 2 3

6

The “insert” (fold right) adverb /

applies to the verb to its immediate left

(here, the + (addition) verb).

This particular adverb takes the verb

and puts it between successive array elements.

So this example is the same as 1 + 2 + 3.

Similarly, we have:

>./ 1 2 3 NB. maximum of 1 2 3

3

<./ 1 2 3 NB. minimum of 1 2 3

1

*/ 1 2 3 NB. product of 1 2 3

6

Here’s a slightly more complicated example:

+/ i. 5 + 5

45

This computes 5 + 5 (10),

creates an array of integers from 0 up to but not including 10 (i. 10),

then sums them up to get 45.

You can have multiple consecutive adverbs:

+/\ 1 2 3 4 5

1 3 6 10 15

The backslash (\) is called “prefix” and is an adverb,

therefore it applies to the verb on its left.

This verb is the +/ summation verb,

which is itself the combination of the + verb and the / adverb.

So the expression +/\ 1 2 3 4 5 parses as ((+/)\) 1 2 3 4 5.

The prefix adverb (\) applies the verb (here, +/)

to successive prefixes of the array operand (here, 1 2 3 4 5),

so you get (+/ 1, +/ 1 2, +/ 1 2 3, +/ 1 2 3 4, and +/ 1 2 3 4 5)

collected into the single array 1 3 6 10 15.

A functional language would write this something like this:

prefix (foldr1 (+)) [1, 2, 3, 4, 5]

Maybe this is easier to understand, but the J code is much more concise, as usual.

Finally, conjunctions combine two verbs, or a verb and a noun,

one on either side.

The & (bond) conjunction combines a verb with a noun,

so we can have 2&+ or +&2 to bind one argument of the + operator

(Haskell fans will recognize this as equivalent to operator sections).

(2&+) 10 NB. parentheses optional

12

(+&2) 10 NB. parentheses not optional

12

The parentheses are not optional in the last example,

because without them, the 2 10 would be considered to be a single array.

The @: (atop) conjunction binds two verbs to form

the composition of the two verbs:

(2&*)@:(+/) 1 2 3 4 5

30

Here, the parentheses are not optional.

Adverbs bind more tightly than conjunctions,

but adverbs also modify the entire verb to their left

so the expression 2&*@:+/ 1 2 3 4 5

would parse as ((2&*)@:+)/ 1 2 3 4 5,

which evaluates to 178.

(The verb (2&*)@:+ is 2*(x+y)

if x is the left operand and y the right.)

I think it’s interesting that functions (verbs) are allowed to have both a monadic (one argument) and a dyadic (two argument) interpretation, but higher-order functions have to be one or the other: a one-argument higher-order function is an adverb, a two-argument higher-order function is a conjunction, and each parses differently.

Implicit composition: trains

If I told you that we still weren’t done yet, would you be surprised? At this point, I highly doubt it. But in a way, I’ve saved the coolest thing for last.

After a lot of experience with APL (J’s predecessor), it became obvious that many syntactic forms had no meaning. For instance, if you juxtapose two or three verbs (functions) next to each other, what does that mean? If the verbs are all applied to a noun, you can understand it as applying each function to the result of the previous function application:

NB. +: is double, *: is square, %: is square root

%: +: *: 1 2 3

1.41421 2.82843 4.24264

This gets parsed as (%: (+: (*: 1 2 3))).

But what would (%: +: *:) 1 2 3 mean?

There is nothing in the syntax I’ve described so far

that gives meaning to functions (verbs) applied to other functions.

The only thing we can apply to functions are higher-order functions

(adverbs and conjunctions),

which are considered to be separate entities.

Recognizing this, Ken Iverson and his colleagues

(including at least E. E. McDonnell and Arthur Whitney,

and no doubt others),

decided that it would be a good idea to assign special meanings

to these combinations of verbs.

This was inspired by mathematics,

where if you have a function f and a function g,

then (f + g)(x) has the natural definition of f(x) + g(x).

Since f + g is a “train” of three functions (verbs),

in J it gets the same meaning it does in math.

(J calls this a “fork”).

The actual rules are these:

NB. f and h are monadic operations; g is dyadic:

(f g h) x = (f x) g (h x)

NB. f, g, and h are all dyadic:

x (f g h) y = (x f y) g (x h y)

We are putting dyadic operations between their arguments, as usual. This allows us to write extremely concise definitions. Here’s one for the average of an array:

avg =: +/ % #

The +/ combination sums the array,

the # (tally) verb computes the length of the array,

and the % (divide) verb divides the sum by the length to get the average.

There is also a two-argument form called a “hook” with this definition:

(f g) x = x f (g x) NB. monadic hook

x (f g) y = x f (g y) NB. dyadic hook

An example would be adding a number to its reciprocal:

+% 5

5.2

Longer trains of verbs are parsed from the right to the left, taking as many forks as possible:

V1 V2 V3 V4 V5 --> V1 V2 [V3 V4 V5]

V1 V2 V3 V4 V5 V6 --> {V1 [V2 V3 [V4 V5 V6]}

Here, I’m using the made-up notation [v1 v2 v3] for a fork

and {v1 v2} for a hook.

So all trains of verbs mean something.

Trains don’t just work for verbs; there is a complex set of rules for trains involving nouns, verbs, adverbs and conjunctions; these rules are spelled out here. They were invented by Ken Iverson (of course), were implemented in early versions of J, were removed from the language for a long time as being “too complicated”, but were recently added back.

Once again, you can see how the syntax (of J, at least, but also of other array languages) has been tweaked to give the maximum expressiveness with the fewest characters, even when doing so requires fairly complex parsing rules.

Other aspects of syntax

I’ve covered what I consider to be the interesting aspects of array language syntax. There’s a lot more that I haven’t mentioned. For instance, you can define functions in an imperative style with fairly conventional control-flow operators and sequences of statements. You can also define anonymous functions, though they may not be proper closures, depending on the language.

Limitations of array language syntaxes

I admire the syntaxes of array languages like APL and J, but I have some reservations about them too. I’m not particularly bothered by the notational oddity of the symbols; you can always define word equivalents for any symbol you don’t want to use:

sqrt =: %:

sqrt 2

1.41421

But there are more fundamental limitations that I don’t like.

Functions with more than two arguments

APL and J have been designed to work naturally with either binary operators (operands on both sides) or unary prefix operators (operand on the right side only). Many functions require more than two operators, and some require no operators.

For no operators, you can pass in a dummy value representing an empty array. Or you could pass in an arbitrary value and simply not use it. Both approaches feel kludgy. On the other hand, functional languages like OCaml and Haskell have a similar limitation, and use a dedicated unit value for this purpose. So this isn’t a big deal.

For more than two operands, there are two cases:

- one or more of the operands are the same type and rank;

- everything else.

In case 1, you can simply pack the similar operands into a larger array.

For case 2, the most straightforward way to handle it

is to create a “boxed array” containing all the arguments.

Boxes are essentially reference cells,

so an array of boxes can contain anything.

J makes this easy with the box constructor primitive ;:

'foo' ; 1 2 3 ; (i. 2 3) NB. string, rank-1 array, rank-2 array

+---+-----+-----+

|foo|1 2 3|0 1 2|

| | |3 4 5|

+---+-----+-----+

You could pass this array to a function as its right operand, and the individual elements (the “real” operands) could be unpacked from it. But this is not a very elegant solution.

My understanding is that K supports true multi-argument functions.

Functions aren’t data

Ken Iverson didn’t like the idea of functions being considered as just another form of data; he felt that a stratification of levels (data to functions to higher-order functions) was the right way to think about programming. Therefore, Iversonian array languages like APL and J cannot be true functional programming languages.

One place where this bites you is that you can’t naively create

an array of functions.

Ironically, the @. (agenda) conjunction in J,

used to implement conditional expressions,

requires a list of functions as its rightmost operand.

J uses a syntactic kludge (in my opinion) to get around this;

functions connected with a ` (tie) operator effectively form

a list of functions.

Iverson referred to this as a “gerund”,

which means a verb acting as a noun (which makes sense).

So +`-`* is a gerund containing three functions.

However, as far as I know the functions are stored in their literal form

and need to be converted back to a real function

in order to be applied to arguments.

This is much less elegant than simply

allowing functions to be constituents of arrays.

(The Remora language mentioned above allows this,

because functions in Remora are considered to be scalar values

which can be constituents of arrays.)

Higher-order functions

On the one hand, array languages hold higher-order functions in so much esteem that they give them distinct syntactic roles. On the other hand, higher-order functions are restricted to being “second-order” i.e. applying only to regular (first-order) functions. This is in sharp contrast to modern functional languages (Scheme, OCaml, Haskell and many others) which allow arbitrarily high-order functions. So, for instance, in a functional language you could define a function which returns a higher-order function acting on first-order functions (this would be a third-order function). To the best of my knowledge, this isn’t possible in APL or J (I’m not sure about K).

The effect of this is that if you want (say) a function which evaluates to a conjunction in J, you are either out of luck or have to resort to some kind of on-the-fly code evaluation mechanism.

This limitation is by no means fundamental; the Remora language mentioned above is a true functional language which allows arbitrarily high-order functions. As far as I can see, the only purpose of this restriction is to facilitate the kind of ultra-concise syntax that array languages are (in)famous for. I’m not sure that this is the best tradeoff.

Anonymous functions

Most array languages have anonymous functions, but they are quite restricted compared to what you find in functional languages. They aren’t true closures, and to the best of my knowledge, you can’t return a function from an anonymous function at all (again, unless you return a text representation of it and evaluate it later). This significantly reduces the expressivity of the languages, and again, there’s no good reason for it other than history and syntax.

Trains

Trains of verbs (in the J sense described above, though they have been incorporated into other array languages) are a uniquely cool feature of array languages. They allow you to write very concise expressions that would require special combinators in functional languages. On the other hand, the rules for trains, and especially the full set of rules for trains which includes adverbs, nouns, and conjunctions as well as verbs, are quite complex and not something that’s easy to memorize. I haven’t worked with this enough to know if memorizing the train rules is necessary or feasible, but I do note that functional languages simply don’t worry about this, since all functions are just values. So if you need a particular way of combining functions in a functional language, you have to define a combinator to do that. That’s certainly a lot simpler than having a large set of implicit rules.

Next time

OK, enough theory! Next time, I want to show some longer examples of code written in array languages, highlighting how different it is to work with these languages versus more conventional languages (or even functional languages!).